Organic Training Wins Every Day Over Synthetic and Hybrid Approaches

In the high-stakes arena of public safety, particularly in mitigating gun violence and active shooter incidents, the deployment of AI-powered visual gun detection systems is a game-changer. However, security managers looking to deploy this technology should understand how the AI models in the solutions they are vetting were created. How an AI model is trained is directly linked to how accurately it detects a weapon, which is what will ultimately buy more time to help save lives in an active shooter situation. That is why training method should be a top criterion for every security manager and systems integrator looking to deploy this technology.

There are several ways to train an AI model, including organically, synthetically or via a hybrid approach. The difference between the three comes down to whether the model was trained on “real-life” scenarios or “pretend” ones. Public spaces are dynamic, with evolving scenarios that a gun detection system must respond to promptly and accurately. Organic data, with its real-life variances, trains the AI to adapt to these changes and recognize what is going on more accurately, something synthetic or hybrid models can struggle with no matter how large the data set.

Organic Training Data

This method uses raw video footage captured directly from security cameras in real-world indoor and outdoor settings around the world, such as schools, hospitals and busy public environments. It reflects the genuine complexities and nuances of everyday environments, from changing lighting conditions to diverse human behaviors. Organic data captures the unpredictability of the real-life scenarios that a gun detection system must navigate, such as how different firearms appear in various conditions. This type of training data enables the AI visual gun detection system to recognize threats more accurately, reducing the chance of false positives and missed gun detections.

Synthetic Data

This training approach leverages only computer-generated data created using advanced 3D animation and rendering techniques. 3D animation uses computer simulation platforms such as Unity or Unreal Engine that have become common in video game development. This method is useful when real-life data is limited. It can “kick start” a project and augment a dataset with images that are similar to what would be encountered in real life. The problem with using synthetic data is that it does not fully replicate the intricacies of real-world environments. Additionally, each frame of a computer-generated video is “perfect”, meaning it lacks the imperfections found in real camera streams.

For example, imagine a camera in a dark room that has a light bulb in the field of view. The pixels looking at the light bulb will saturate, whereas the farthest reaches of the room that the light bulb illuminates will be dark. This can be modeled by a computer, but in real life, each camera handles this scenario differently. Some cameras take multiple images for each frame at various sensitivity levels, then merge the images together to make a Wide Dynamic Range photo. This can be effective but can also lead to multiple edges on an object that is in motion within the frame. Variables such as frame rate, processing power, imager sensitivity and noise can all change the camera’s response. One could attempt to model and train on all of them, but that would lead to millions of images of the same room, creating an unbalanced database.
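The multiple-edge effect can be illustrated with a toy one-dimensional sketch. This is not any camera vendor's actual pipeline: the merge rule, the function names and every value below are assumptions chosen for illustration. A dark object in front of a bright background is captured twice, once at low gain and once at high gain, and the two captures are merged by preferring the high-gain pixel unless it has saturated.

```python
# Toy 1-D sketch of a two-exposure Wide Dynamic Range merge.
# All names, gains and radiance values are hypothetical.
FULL_SCALE = 255  # assumed 8-bit sensor ceiling


def scene_row(object_start, width=16, background=200, obj=10, object_len=3):
    """One row of scene radiance: a dark object in front of a bright background."""
    return [obj if object_start <= i < object_start + object_len else background
            for i in range(width)]


def capture(scene, gain):
    """Simulate one exposure: scale by gain, then clip at sensor saturation."""
    return [min(FULL_SCALE, round(v * gain)) for v in scene]


def merge_wdr(short_exp, long_exp, short_gain, long_gain):
    """Naive merge: trust the long exposure unless it saturated, else use the short one."""
    return [l / long_gain if l < FULL_SCALE else s / short_gain
            for s, l in zip(short_exp, long_exp)]


def count_edges(row, threshold=50):
    """Count large jumps between neighbouring pixels."""
    return sum(1 for a, b in zip(row, row[1:]) if abs(a - b) > threshold)


def wdr_edges(start_short, start_long):
    """Edges in the merged row when the object sits at different positions per exposure."""
    short_exp = capture(scene_row(start_short), gain=1)
    long_exp = capture(scene_row(start_long), gain=4)
    return count_edges(merge_wdr(short_exp, long_exp, 1, 4))


static_edges = wdr_edges(3, 3)  # object does not move between exposures
moving_edges = wdr_edges(3, 9)  # object moves six pixels between exposures
print(static_edges, moving_edges)  # prints: 2 4
```

In this sketch the stationary object produces two edges, while the moving object appears twice in the merged row and produces four. A renderer would have to reproduce this behavior, and its per-camera variations, for synthetic frames to match.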

Another shortcoming of synthetic methods lies in how the data is generated. Some methods entail modeling a firearm suspended in space and rotating it in every possible pose such that the network can learn how a 2D snapshot of a 3D object in 3D space appears in every orientation. The problem is that weapons floating in space will never be seen in the real world. Weapons are always held or suspended by something, leading to occlusions that the described method does not model. Training on floating weapons can lead to the network developing dependencies on visual features that aren’t available in the real world.

Hybrid Solutions

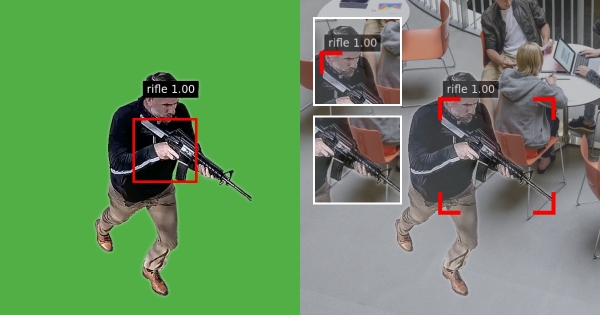

This method involves using real camera footage recorded against green screens to digitally alter backgrounds and synthetically swap out environments. Green-screening is a process whereby several cameras may be set up in a studio looking at a subject on a green backdrop and green floor. As described previously in the Synthetic Data section, Green-screening is insufficient for production systems because it inherently cannot match the real-world lighting, camera sensitivity and artifacts that the camera will typically produce. For example, in the real-world there might be shadows or bright rays of sunlight cast on the weapon due to a low sun angle. There also may be dust on the lens, or diffused light being reflected or cast onto the object from an accent wall or decorative lighting, or specular reflections off a surface of the weapon. If an AI visual gun detection system uses models trained through green screening, it will not be able to compensate as well for these common natural features that are present in everyday.

Simulated image, Omnilert will never employ green screen filming in training data.

Hybrid methods also don’t model interactions with the background. For example, the overlaid person with the weapon never picks up the telephone receiver on the desk in the office. They never move a chair or walk behind a desk. In fact, with the green-screening method, the overlaid person must walk unnaturally on top of all the objects in the room, never behind the counter or behind the translucent window at a clerk’s counter, nor do they create shadows or reflections in mirrored surfaces and windows. This leads to more situations that will never be seen in real life. Without learning what it looks like to interact with the environment in real life, the network has a large gap in “understanding”, so that when someone picks up an object from the background, false alarms may occur.
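The “always on top” limitation can be shown with a minimal sketch of overlay compositing on a single row of pixels. The labels, depth values and function names are hypothetical and exist only for illustration. The comparison assumes a per-pixel depth for the background, which a flat green-screen overlay does not have.

```python
# Toy sketch: flat overlay versus depth-aware compositing on one pixel row.
# All labels and depth values are hypothetical.

def naive_overlay(background, person):
    """Green-screen style: any person pixel replaces the background pixel."""
    return [p if p is not None else b for b, p in zip(background, person)]


def depth_aware(background, bg_depth, person, person_depth):
    """Keep whichever pixel is nearer the camera (smaller depth value)."""
    return [p if p is not None and person_depth < d else b
            for b, d, p in zip(background, bg_depth, person)]


background = ["wall", "wall", "counter", "counter", "wall"]
bg_depth = [5, 5, 1, 1, 5]  # the counter is close to the camera
person = [None, "person", "person", "person", None]  # keyed-out subject
person_depth = 3  # the person stands between the counter and the wall

flat = naive_overlay(background, person)
occluded = depth_aware(background, bg_depth, person, person_depth)
print(flat)      # ['wall', 'person', 'person', 'person', 'wall']
print(occluded)  # ['wall', 'person', 'counter', 'counter', 'wall']
```

The flat overlay paints the person over the counter, while a real camera would record the second row, with the counter hiding part of the person.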

Hybrid training attempts to blend the authenticity of real footage with the controlled variability of synthetic environments. However, while aiming to combine the best of both worlds, hybrid solutions often face challenges in seamlessly integrating real and synthetic elements, which can affect the overall performance and reliability of the AI system.

Omnilert is Grounded in Real-World Training

An organic approach mirrors how humans learn. We, as humans, are also limited in our “datasets”. We cannot experience every object in every lighting condition, but we don’t need to. By experiencing a representative sample, we learn the relationship between lighting and its effects on illuminated objects, including the shadows, reflections and visual artifacts that the lighting casts. We learn depth and object geometries. This understanding allows us to make valid assumptions about what an object is, even when we find it in an unfamiliar environment. Similarly, a neural network that is carefully trained on a representative sample of valid scenarios is more likely to have a similar “understanding” that can generalize to scenarios it was never trained on. This requires a balanced dataset with representative samples captured from sequences of real-life scenarios from real-life cameras.

Omnilert’s dataset has grown over the last six years with data collected from thousands of real-world cameras in real-world scenarios where both weapons and common, everyday objects have been captured on video. Each image in the dataset has been carefully selected to model a given scenario that needed attention. Each annotation has been reviewed multiple times to ensure 100% accuracy and consistency in annotation methodology. This bespoke method has led to what we feel is the world’s best performance in visual weapons detection as well as an extremely low false alarm rate in real-world scenarios.

Omnilert’s expertise in AI has roots in the U.S. Department of Defense and DARPA, specifically in real-time target recognition and threat classification. That military focus on high reliability and precision carried through to the development of Omnilert Gun Detect, which goes beyond identifying guns to finding active shooter threats. The system does not use synthetic data generation and, once deployed, it continually learns in the environment in which it operates by using real-world data captured from onsite cameras.

Commenting on the accuracy of Omnilert Gun Detect, Steve Morandi, Vice President of Product at Evolv Technology, stated, “We tested the Omnilert Gun Detect analytics using many kinds of guns, in different environments inside and outside, and under varying lighting conditions. Throughout all our testing, we were very impressed with the accuracy of the analytics and low false positive rate.”

Real World or Pretend. What’s in that AI System?

In a potential active shooter situation, the margin for error is negligible. Security teams depend on the reliability of gun detection systems to make life-saving decisions. Systems that rely on synthetic data can have a detrimental effect on detection accuracy, as most synthetic data can never be reproduced in the real world, and most real-world data cannot be reproduced synthetically. When a training database is weighted too heavily with synthetic and hybrid data, it is difficult to fine-tune with real data due to the disproportionate number of synthetic examples relative to real ones.
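The arithmetic behind that imbalance is simple to sketch. The counts below are hypothetical, not figures from any actual dataset, and the function names are illustrative. The calculation assumes training batches are drawn uniformly at random from the combined pool.

```python
# Toy arithmetic: how rarely real images appear when synthetic data dominates.
# The dataset sizes and batch size are hypothetical.

def real_share(n_real, n_synthetic):
    """Fraction of the combined dataset that is real footage."""
    return n_real / (n_real + n_synthetic)


def expected_real_per_batch(n_real, n_synthetic, batch_size):
    """Expected number of real images in a uniformly sampled batch."""
    return batch_size * real_share(n_real, n_synthetic)


n_real, n_synthetic = 10_000, 5_000_000  # hypothetical counts
share = real_share(n_real, n_synthetic)
per_batch = expected_real_per_batch(n_real, n_synthetic, batch_size=64)
print(f"{share:.2%} of the data is real; "
      f"about {per_batch:.2f} real images per batch of 64")
```

With these assumed counts, a typical batch contains fewer than one real image, so the real footage has little influence on each training step.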

Organic data ensures that the AI is well-versed in the complexities of real-life situations, making it more dependable in critical moments. And as we continue to harness the power of AI in critical security applications, grounding our technology in the realities of the world it's meant to protect will always be the best way to train a model to ensure it provides quick and accurate detection when we need it most.